Abstract

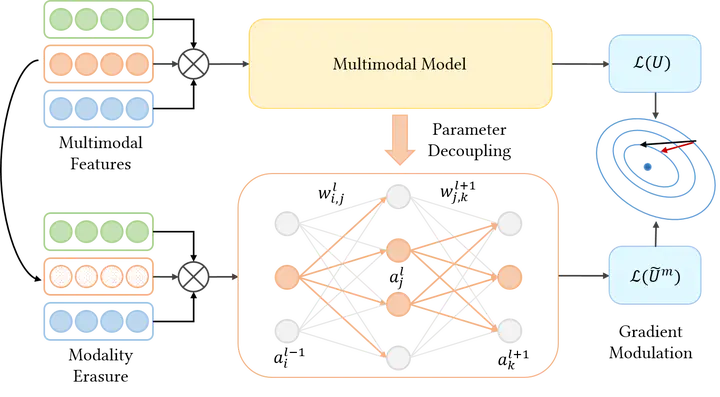

Emotion recognition in conversation aims to identify the emotion underlying each utterance and has great potential in various domains. Human perception of emotions relies on multiple modalities, such as language, vocal tonality, and facial expressions. While many studies have incorporated multimodal information to enhance emotion recognition, the performance of multimodal models often plateaus when additional modalities are added. We demonstrate through experiments that the main reason for this plateau is an imbalanced assignment of gradients across modalities. To address this issue, we propose fine-grained adaptive gradient modulation, a plug-in approach that rebalances the gradients across modalities. Experimental results show that our method improves the performance of all baseline models and outperforms existing plug-in methods.